In this tutorial, we will take a look at XML sitemaps and their role in SEO.

A Bit of History #

In 2005, Google began letting webmasters submit XML sitemaps via Webmaster Tools (today called Google Search Console) to make their sites more crawler-friendly.

What are XML sitemaps? #

An XML sitemap is a file that lists the URLs on your website.

It allows webmasters to include additional information about each URL: when it was last updated, how often it changes, and how important it is in relation to other URLs of the site.

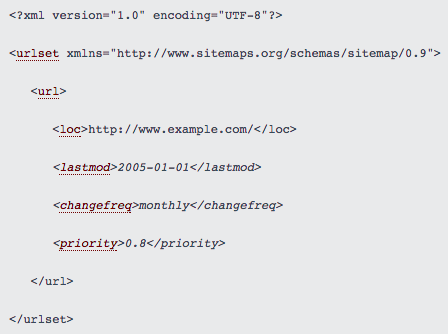

Below, you can see what the raw code for a standard XML sitemap entry looks like:
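As a rough illustration, here is a minimal Python sketch that builds one such entry with the standard library and prints the resulting XML. The `build_sitemap` helper, the URL, and the values are placeholders made up for this example; only the tag names (`loc`, `lastmod`, `changefreq`, `priority`) and the namespace come from the sitemap protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Return sitemap XML (as a string) for a list of entry dicts."""
    ET.register_namespace("", SITEMAP_NS)  # serialize tags without a prefix
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for entry in entries:
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        # <loc> is required; the other three tags are optional hints.
        for tag in ("loc", "lastmod", "changefreq", "priority"):
            if tag in entry:
                ET.SubElement(url, f"{{{SITEMAP_NS}}}{tag}").text = str(entry[tag])
    ET.indent(urlset)  # pretty-print; requires Python 3.9+
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder values for illustration only.
example = build_sitemap([
    {
        "loc": "https://www.example.com/blog/xml-sitemaps/",
        "lastmod": "2019-06-01",
        "changefreq": "monthly",
        "priority": 0.8,
    }
])
print(example)
```

Each `<url>` element describes one page: `loc` is its address, `lastmod` is when it was last updated, `changefreq` is how often it changes, and `priority` is its importance relative to other URLs on the same site.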

This information is used by Google and other search engines to crawl your website more efficiently.

Up to 2011, there was some evidence that submitting a sitemap caused Google to crawl faster and sped up the indexing of pages.

… But Google has made major changes in the past eight years.

So, do websites still get real SEO benefits from a sitemap file nowadays?

This is the question that I will attempt to answer in this post.

Crawling #

Google's own guidance says that if your site's pages are properly linked, its web crawlers can usually discover most of your site.

Google created the sitemap.xml in 2005 to fix a problem – it exposed content that the search engines could not find otherwise.

This was a big deal: it was still the era of sites that relied heavily on Flash, Silverlight, Java, and JavaScript for navigation and content.

Content that can’t be found by clicking around on a website is a problem sitemaps help to solve.

But… A few things have changed in the 14 years since the creation of the sitemap and most of the problems that sitemaps were meant to fix are no longer problems.

Google can run and read JavaScript.

Google can run and read Flash (besides which, Flash is the walking dead: even Adobe has finally given up on the security dumpster fire known as Flash Player).

Silverlight is dead.

Java in browsers is on life support (although it’s alive and well on servers and as the Android development language).

Web developers have learned how to make deep content and database-created pages easy to browse and to spider.

So it’s useless? #

Not quite. There are a few useful jobs for the sitemap.xml:

- Crawling orphaned pages

- Managing crawl budget

- Triggering a re-crawl after a site relaunch

Sometimes, pages end up without any internal links pointing to them, making them hard to find.

XML sitemaps help search engines to find these orphaned pages (pages that got left out of your internal linking).

According to Gary Illyes:

XML sitemaps are the second most important source of URLs to be crawled by Googlebot after hyperlinks and previously discovered URLs.
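To find orphaned pages on your own site, one simple approach is to compare the full list of URLs you publish with the URLs that a crawl of your internal links actually reaches. The sketch below assumes you already have both lists; the function name and the sample URLs are hypothetical.

```python
def find_orphaned_urls(all_urls, internally_linked_urls):
    """Return URLs that exist on the site but that no internal link points to."""
    return sorted(set(all_urls) - set(internally_linked_urls))


# Hypothetical inputs: in practice, the first list might come from your CMS
# or database, and the second from crawling your own internal links.
all_urls = [
    "https://www.example.com/",
    "https://www.example.com/about",
    "https://www.example.com/landing/spring-sale",
]
linked_urls = [
    "https://www.example.com/",
    "https://www.example.com/about",
]

orphans = find_orphaned_urls(all_urls, linked_urls)
print(orphans)
```

Any URL this returns is a candidate for the sitemap, and usually also a hint that your internal linking needs attention.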

Google is huge, but it still needs to use its resources wisely. It allocates a certain “budget” of Googlebot time to each site, so it doesn’t necessarily crawl every page of your site at once. It often splits a site crawl into batches; I’ve seen Google take weeks to finish crawling a site. So if your site has 5,000 pages and you recently updated a dozen, you want Google to focus on those. You can update their modification dates in sitemap.xml, which signals to Google that it should focus its effort there.
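Updating those modification dates by hand gets tedious, so here is a minimal sketch of how it could be scripted with Python's standard library. It assumes the sitemap is available as a string and that you know which URLs changed; `update_lastmod` and the sample data are made up for this example.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
NS = {"sm": SITEMAP_NS}


def update_lastmod(sitemap_xml, changed):
    """Set <lastmod> for every URL in `changed` (dict: URL -> ISO date)."""
    ET.register_namespace("", SITEMAP_NS)  # keep tags unprefixed on output
    root = ET.fromstring(sitemap_xml)
    for url in root.findall("sm:url", NS):
        loc = (url.findtext("sm:loc", namespaces=NS) or "").strip()
        if loc in changed:
            lastmod = url.find("sm:lastmod", NS)
            if lastmod is None:
                # The entry had no <lastmod> yet, so add one.
                lastmod = ET.SubElement(url, f"{{{SITEMAP_NS}}}lastmod")
            lastmod.text = changed[loc]
    return ET.tostring(root, encoding="unicode")


# Sample sitemap with placeholder URLs and dates.
sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/a</loc><lastmod>2019-01-01</lastmod></url>
  <url><loc>https://www.example.com/b</loc></url>
  <url><loc>https://www.example.com/c</loc><lastmod>2019-01-01</lastmod></url>
</urlset>"""

updated = update_lastmod(sitemap, {
    "https://www.example.com/a": "2019-06-15",
    "https://www.example.com/b": "2019-06-15",
})
print(updated)
```

Only the entries for pages that really changed get a new date; bumping every `lastmod` on each deploy would make the signal meaningless.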

If you relaunch a site and change many pages’ URLs, a sitemap helps kick off a re-crawl and refresh the search results so that they point to the new content and pages.

Indexation #

The pages that have been crawled by search engines can be added to their index, which then enables these pages to show up in the search results.

However, having a sitemap does not guarantee that Google will index every page listed in it.

As Gary Illyes said:

submitting a sitemap doesn’t guarantee the pages referenced in it will be indexed. Think of a sitemap as a way to help the Googlebot find your content. If the URLs were not included in the sitemap, the crawlers might have a harder time finding those URLs and thus they might be indexed slower

Another thing to keep in mind is that the algorithms may decide not to index certain URLs at all. For instance, if the content is shallow, it may be only partially indexed or not indexed at all.

In other words, by submitting an XML sitemap to Google Search Console, you’re giving Google a clue that you consider the pages in the XML sitemap to be good-quality search landing pages, worthy of indexation. But it’s just a clue!

Google does not index your pages just because you asked nicely. Google indexes pages because (a) they found them and crawled them, and (b) they consider them good enough quality to be worth indexing. Pointing Google at a page and asking them to index it doesn’t really factor into it.

Which pages to exclude #

One common mistake is to include every indexable page in the sitemap. Being indexable is not the only condition.

The following must be excluded from your sitemap:

- Non-canonical pages.

- Pages with query string values.

- Archived pages.

- Pages that result in a 301 or 302 redirect.

- 404 or 500 error pages.

- Utility pages.

- Pages blocked by your robots.txt file or pages with a noindex directive.
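The exclusion rules above can be sketched as a simple filter. This is only an illustration under assumptions: the `Page` record, its field names, and the `kind` labels are hypothetical, and in practice the data would come from your own crawl or CMS.

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlsplit


@dataclass
class Page:
    """Hypothetical crawl record; the field names are assumptions."""
    url: str
    status: int = 200                 # HTTP status code returned for the URL
    canonical: Optional[str] = None   # rel="canonical" target, if any
    noindex: bool = False             # page carries a noindex directive
    blocked_by_robots: bool = False   # URL is disallowed in robots.txt
    kind: str = "content"             # e.g. "content", "archive", "utility"


def belongs_in_sitemap(page: Page) -> bool:
    if page.status != 200:
        return False  # redirects (301/302) and errors (404/500)
    if page.canonical is not None and page.canonical != page.url:
        return False  # non-canonical page
    if urlsplit(page.url).query:
        return False  # URL with query string values
    if page.noindex or page.blocked_by_robots:
        return False
    if page.kind in ("archive", "utility"):
        return False
    return True


pages = [
    Page("https://www.example.com/pricing"),
    Page("https://www.example.com/pricing?ref=ad",
         canonical="https://www.example.com/pricing"),
    Page("https://www.example.com/old", status=301),
    Page("https://www.example.com/missing", status=404),
    Page("https://www.example.com/login", kind="utility"),
    Page("https://www.example.com/drafts", noindex=True),
]

keep = [p.url for p in pages if belongs_in_sitemap(p)]
print(keep)
```

Which pages count as "archive" or "utility" is a site-specific decision, so that label has to be assigned by you rather than detected automatically.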

Images and videos sitemaps #

Google sitemaps are not just for webpages. Google supports sitemap extensions for images, videos, and news items as well.

If your site has a lot of rich media, these can be added to your existing sitemap or you can create a separate sitemap.

For example, if your site loads some images through JavaScript code, an image sitemap helps Google discover them.

For most sites, an image sitemap is not essential unless images are an important part of your strategy.

For example, if the purpose of your site is stock photography, then I would recommend an image sitemap.
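For a sense of what image entries look like, here is a minimal sketch that adds them to a regular sitemap using Google's image extension namespace. The `build_image_sitemap` helper and the URLs are placeholders invented for this example.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
IMAGE_NS = "http://www.google.com/schemas/sitemap-image/1.1"


def build_image_sitemap(pages):
    """pages: dict mapping a page URL to the list of image URLs shown on it."""
    ET.register_namespace("", SITEMAP_NS)
    ET.register_namespace("image", IMAGE_NS)
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page_url, image_urls in pages.items():
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page_url
        for image_url in image_urls:
            # One <image:image> block per image that appears on the page.
            image = ET.SubElement(url, f"{{{IMAGE_NS}}}image")
            ET.SubElement(image, f"{{{IMAGE_NS}}}loc").text = image_url
    ET.indent(urlset)  # pretty-print; requires Python 3.9+
    return ET.tostring(urlset, encoding="unicode")


# Placeholder URLs for illustration only.
image_sitemap = build_image_sitemap({
    "https://www.example.com/gallery/sunsets": [
        "https://www.example.com/photos/sunset-1.jpg",
        "https://www.example.com/photos/sunset-2.jpg",
    ],
})
print(image_sitemap)
```

The same pattern works whether you add the image entries to your existing sitemap or keep them in a separate file.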

Do you need a sitemap? #

For a small website (fewer than 500 pages), a sitemap is technically not required. Google will eventually find your content, but I strongly recommend that all sites, no matter their size, use a sitemap to maximize the chance that Google discovers their content efficiently.

Google's documentation states:

In most cases, your site will benefit from having a sitemap, and you’ll never be penalized for having one.

So basically, every website would benefit from having a sitemap: it doesn’t hurt your SEO efforts, it doesn’t pose any risks, and it only brings benefits.

So why not use one?