In this tutorial, we will take a look at the robots.txt file, exploring its role in SEO, how to create one, and the recommended best practices.

What is a robots.txt file?

According to Google:

A robots.txt file tells search engine crawlers which URLs the crawler can access on your site.

Robots.txt is a text file (hosted on the web server) that provides instructions to search engine robots or web crawlers regarding which pages or directories of a website should be accessed or excluded from crawling.

If there is no robots.txt file, or none of its directives apply to a particular crawler, that crawler is free to crawl the entire website without any restrictions.

As a bit of background: Crawling is when Googlebot looks at pages on the web following the links that it sees there to find other web pages. Indexing, the other part, is when Google systems try to process and understand the content on those pages, so they can be shown in the search results.

Robots.txt is not a mechanism for keeping a web page out of Google.

- While the robots.txt file is generally respected by major search engines, search engines can disregard certain sections of it or the entire file. The robots.txt file merely provides instructions that crawlers may choose to follow, rather than a mandatory directive. Also, a URL blocked by robots.txt could still be indexed, without its content, if other pages link to it.

If you want to hide a page completely from Search, use another method.

- To reliably keep a webpage out of search results, use a meta robots noindex tag or password-protect the page. For noindex to work, the page must not be blocked by robots.txt: if a crawler can’t fetch the page, it never sees the tag.
- You can also remove URLs from Google with the URL removal tool in Google Search Console. However, this only hides the URLs temporarily: to keep them out of Google’s search result pages, you must submit a new request to hide the URLs every 180 days.

What is the robots.txt file used for?

By utilising the robots.txt file, you have the ability to:

Block Non-Public Pages: some pages on your website should not be crawled. For instance, you might have a staging version of a page or a login page that needs to exist but shouldn’t be reached through search engines. In such cases, you can use the robots.txt file to stop search engine crawlers and bots from accessing these specific pages.

Maximise Crawl Budget: If you’re having trouble getting all of your website’s pages indexed, you may have a crawl budget issue. By using robots.txt to block unimportant pages, you let Googlebot spend a greater portion of your crawl budget on the pages that truly matter, so it can focus on crawling the content that matters most.

Prevent Indexing of Resources: Meta directives can be effective in preventing pages from being indexed, but a meta robots tag can only be placed in an HTML page, so it can’t be added to multimedia resources such as PDFs and images. In such cases, the robots.txt file becomes crucial in managing how these types of content are crawled and indexed.

Prevent Duplicate Content: Although the use of a canonical URL or meta robots tag can help prevent duplicate content, they do not address the specific issue of ensuring that search engines only crawl relevant pages. These methods primarily focus on preventing search engines from displaying those pages in search results, rather than controlling the crawling behaviour of search engines.

How to create a robots.txt file?

Format and location rules:

- The file must be named robots.txt. The name is case-sensitive, so get that right, or it won’t work.
- The robots.txt file must be located at the root of the website host. Each host can have only one robots.txt file.
  - If you already have a robots.txt file on your website, it’ll be accessible at domain.com/robots.txt.
  - If you don’t already have one, open a blank .txt document, save it as robots.txt and place the file at the root of the website host.
- Use a separate robots.txt file for each subdomain. For example, if your main website sits at domain.com and your blog sits at blog.domain.com, you need two robots.txt files: one in the root directory of the main domain and the other in the root directory of the blog.
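Because the file always sits at the root of the host, you can work out which robots.txt governs any URL by keeping only its scheme and host. Below is a minimal Python sketch using the standard library’s urllib.parse; the function name robots_url and the sample page paths are our own (hypothetical), and domain.com stands in for your domain as above.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL that applies to page_url (hypothetical helper).

    Keeps the scheme and host (including any port) and replaces the path,
    query and fragment with /robots.txt, so each subdomain gets its own file.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://domain.com/shop/t-shirts?page=2"))  # https://domain.com/robots.txt
print(robots_url("https://blog.domain.com/post-title/"))      # https://blog.domain.com/robots.txt
```

Note how the blog on blog.domain.com resolves to a different file from the main site, which is why each subdomain needs its own robots.txt.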

How to write a robots.txt file?

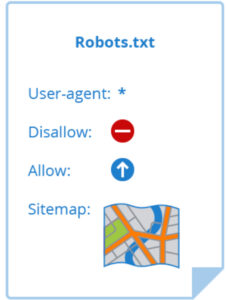

The main elements of a robots.txt file are:

- User-agent
- Directives
  - Disallow
  - Allow
  - XML Sitemap location

User-agent

Every search engine crawler identifies itself with a user-agent name.

Common search engine bot user-agent names are:

| Search engine | User-agent name |
|---|---|
| Google | Googlebot |
| Google Images | Googlebot-Image |
| Bing | Bingbot |
| Yahoo | Slurp |
| Baidu | Baiduspider |
| DuckDuckGo | DuckDuckBot |

To prevent Googlebot from accessing and crawling your website, use:

User-agent: Googlebot
Disallow: /

To prevent all bots from accessing and crawling your website, use the star (*) wildcard, which assigns the instructions to all user-agents collectively:

User-agent: *
Disallow: /

To prevent all bots except Googlebot from accessing and crawling your website, use:

User-agent: *
Disallow: /

User-agent: Googlebot
Allow: /

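Basically, each new user-agent line starts a group with a clean slate: a crawler follows only the group that matches its name most specifically and ignores the others, including the * group. In the last example, Googlebot follows its own Allow: / rule and ignores Disallow: /. However, if you declare the same user-agent multiple times, all applicable directives are combined and followed.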
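You can check this behaviour locally with Python’s standard-library parser, urllib.robotparser. This is a minimal sketch assuming Python 3; the user-agent name SomeOtherBot is hypothetical. Keep in mind that this parser is simpler than Google’s (for example, it does not merge repeated user-agent groups or understand wildcards), so treat it as an illustration rather than a reference for how Googlebot behaves.

```python
from urllib.robotparser import RobotFileParser

# The "all bots except Googlebot" file; the blank line separates the two groups.
ROBOTS_TXT = """\
User-agent: *
Disallow: /

User-agent: Googlebot
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Googlebot matches its own group; every other agent falls back to the * group.
for agent in ("Googlebot", "Bingbot", "SomeOtherBot"):
    print(agent, parser.can_fetch(agent, "https://domain.com/any-page/"))
# Googlebot True
# Bingbot False
# SomeOtherBot False
```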

Directives

Directives are rules that you want the user-agents to follow.

Disallow and Allow

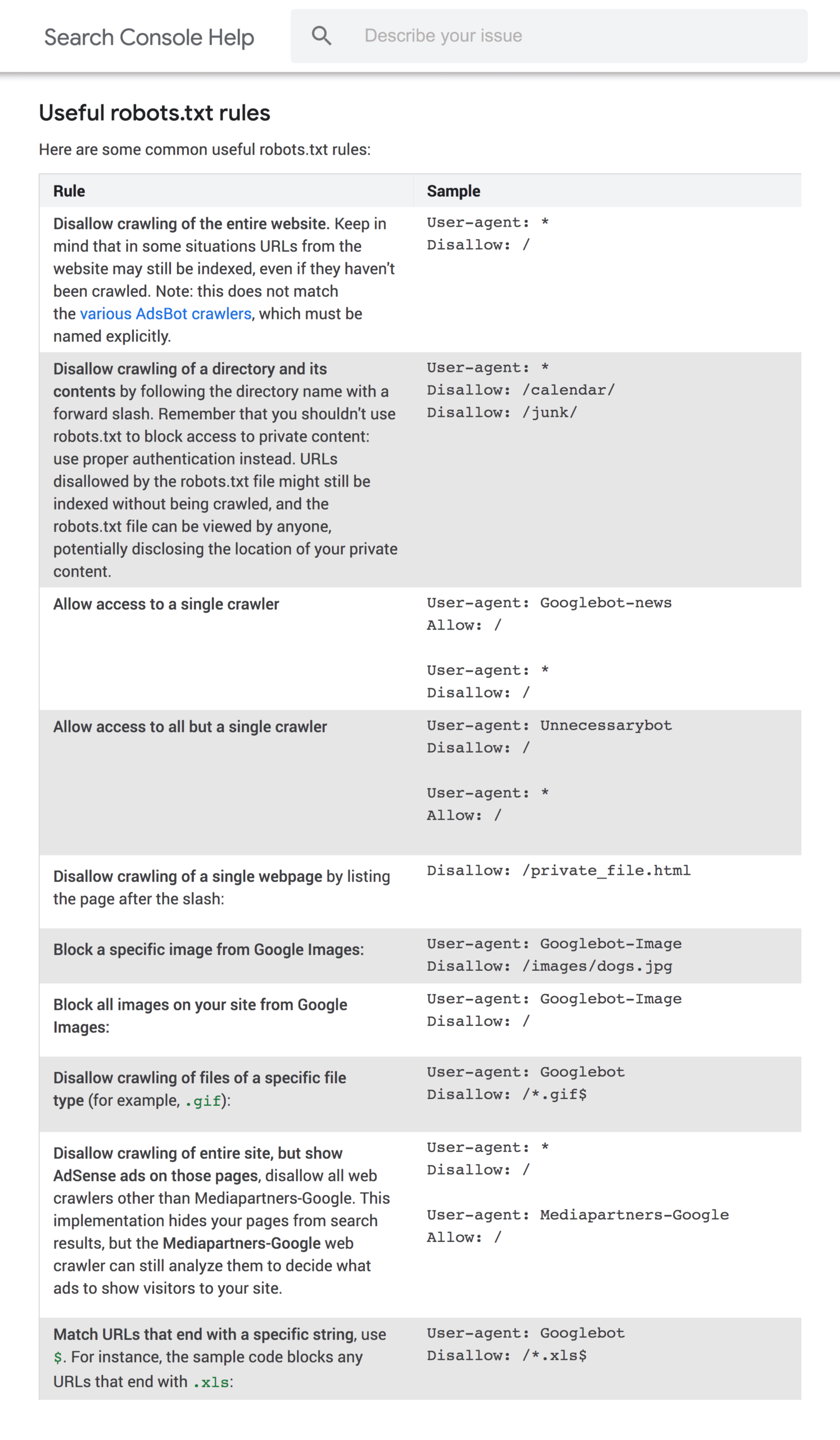

The Google-provided table above shows examples of supported rules that disallow or allow crawling of different pages of your site.

💡 ABOUT CONFLICTING RULES

If not handled with caution, disallow and allow directives can easily conflict with each other.

In the example shown below, we’re disallowing access to /blog/ while allowing access to /blog.

User-agent: *
Disallow: /blog/
Allow: /blog

So, the URL /blog/post-title/ matches both rules: it is simultaneously disallowed and allowed.

Which one takes precedence and is followed?

- For Google and Bing, the directive with the greatest number of characters takes precedence. In this case, the Disallow directive has more characters, so it takes precedence:

  Disallow: /blog/ (6 characters)
  Allow: /blog (5 characters)

  When the Allow and Disallow directives have the same length, the less restrictive directive (Allow) takes precedence and is followed.

- For other search engines, the first matching directive takes precedence and is followed. In this case, that’s Disallow, because it comes first.
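To make the two precedence strategies concrete, here is a minimal Python sketch of both. The function names are hypothetical, and the matching is deliberately simplified to plain path prefixes (no * or $ wildcards); real crawlers’ parsers handle more cases.

```python
# Each rule is (directive, path) in file order. Plain prefix matching only:
# wildcards (* and $) are not handled in this sketch.
RULES = [("Disallow", "/blog/"), ("Allow", "/blog")]


def longest_match_allows(rules, path):
    """Google/Bing style: the longest matching rule wins; on a tie, Allow wins."""
    matches = [(len(rule_path), directive == "Allow")
               for directive, rule_path in rules if path.startswith(rule_path)]
    if not matches:
        return True  # no rule matches, so crawling is allowed
    # max() compares (length, is_allow): longest rule first, then Allow over Disallow
    return max(matches)[1]


def first_match_allows(rules, path):
    """First-match style: the first rule in file order that matches wins."""
    for directive, rule_path in rules:
        if path.startswith(rule_path):
            return directive == "Allow"
    return True  # no rule matches, so crawling is allowed


path = "/blog/post-title/"
print(longest_match_allows(RULES, path))  # False: Disallow: /blog/ is longer
print(first_match_allows(RULES, path))    # False: Disallow: /blog/ comes first

# With the two lines swapped, the strategies disagree.
swapped = list(reversed(RULES))
print(longest_match_allows(swapped, path))  # False
print(first_match_allows(swapped, path))    # True
```

The swapped case shows why rule order matters for first-match crawlers but not for longest-match ones.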

XML Sitemap location

This directive tells search engines the exact location of your sitemap(s). Use the full, absolute URL of the sitemap, for example Sitemap: https://www.mauroromanella.com/sitemap.xml.

The Sitemap directive is not tied to any user-agent, so you don’t need to repeat it for each one. It’s best to place sitemap directives at the beginning or end of your robots.txt file.

If you are not familiar with sitemaps, please refer to my previous article that discusses XML sitemaps.
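Because the Sitemap line belongs to no user-agent group, a parser can collect it from anywhere in the file. As an illustration, Python’s urllib.robotparser (Python 3.8 or later) returns every Sitemap URL through site_maps(), regardless of where it appears. The Googlebot group and the /private/ path below are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# The Sitemap line sits after the Googlebot group but is not part of it.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/

User-agent: Googlebot
Disallow: /private/

Sitemap: https://www.mauroromanella.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns every Sitemap URL in the file (or None if there are none).
print(parser.site_maps())  # ['https://www.mauroromanella.com/sitemap.xml']
```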

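Robots.txt file example

Below is an example of a very basic robots.txt file for a WordPress website.

User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php

Sitemap: https://www.mauroromanella.com/sitemap.xml

It blocks all crawlers from the /wp-admin/ area, still lets them fetch /wp-admin/admin-ajax.php (for Google, the longer Allow rule takes precedence), and points them to the sitemap.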

Robots.txt file best practices

Follow these best practices to avoid common mistakes:


Use a new line for each directive

It is recommended to place each directive on a separate line to prevent confusion for search engines.

Bad:

User-agent: * Disallow: /directory/ Disallow: /another-directory/

Good:

User-agent: *
Disallow: /directory/
Disallow: /another-directory/
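To see why line breaks matter, here is how Python’s urllib.robotparser reads the bad and good files, written out in full in the sketch below. It takes everything after the first colon of the single line as the user-agent name, so the one-line file ends up with no rules and blocks nothing. Other parsers may fail differently; AnyBot and the sample paths are hypothetical.

```python
from urllib.robotparser import RobotFileParser

BAD = "User-agent: * Disallow: /directory/ Disallow: /another-directory/"
GOOD = """\
User-agent: *
Disallow: /directory/
Disallow: /another-directory/
"""


def can_fetch(robots_txt, path):
    """Parse robots_txt and ask whether a generic crawler may fetch path."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("AnyBot", "https://domain.com" + path)


print(can_fetch(BAD, "/directory/page.html"))   # True: nothing is blocked
print(can_fetch(GOOD, "/directory/page.html"))  # False: the rule applies
```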

Use wildcards to simplify instructions

You can utilise wildcards (*) not only to apply directives to all user-agents but also to match URL patterns when specifying directives.

For instance, if you wish to restrict search engine access to parameterised product category URLs on your website, you can list them as follows using wildcards:

User-agent: *
Disallow: /products/*?

This directive prevents search engines from crawling any URL within the /products/ subfolder that contains a question mark, which includes parameterised product category URLs like “/products/t-shirts?”, “/products/jacket?”, “/products/hoodies?”, and others.


Use “$” to specify the end of a URL

To signify the end of a URL, you can incorporate the “$” symbol. For instance, if you intend to restrict search engine access to all .pdf files on your website, your robots.txt file could be as follows:

User-agent: *
Disallow: /*.pdf$

In this example, search engines will be blocked from accessing “/file.pdf”, but they will still have access to “/file.pdf?id=test”, since that URL does not end with “.pdf”.
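The * and $ rules boil down to simple pattern matching. As a rough Python sketch (our own translation to regular expressions, not Google’s parser), * becomes .* and a trailing $ anchors the match at the end; the pattern is matched from the first character of the URL path, with the query string included. The function names and the ?color=red query are hypothetical.

```python
import re


def rule_to_regex(rule_path):
    """Translate a robots.txt path pattern into a compiled regex.

    '*' matches any sequence of characters (including none), and a trailing '$'
    anchors the pattern to the end of the URL. Everything else is literal.
    """
    anchored = rule_path.endswith("$")
    if anchored:
        rule_path = rule_path[:-1]
    # Escape each literal piece and rejoin the pieces with '.*' where '*' was.
    body = ".*".join(re.escape(piece) for piece in rule_path.split("*"))
    return re.compile(body + (r"\Z" if anchored else ""))


def rule_matches(rule_path, url_path):
    """True if the rule matches url_path (path plus query string).

    re.match only anchors at the start, so an unanchored rule acts as a prefix.
    """
    return rule_to_regex(rule_path).match(url_path) is not None


print(rule_matches("/products/*?", "/products/t-shirts?color=red"))  # True
print(rule_matches("/products/*?", "/products/t-shirts"))            # False
print(rule_matches("/*.pdf$", "/file.pdf"))                          # True
print(rule_matches("/*.pdf$", "/file.pdf?id=test"))                  # False
```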


Be as specific as possible

Let’s assume that you want to prevent search engines from accessing the /de/ subdirectory of your website. If you use “Disallow: /de”, the subdirectory and every page or file whose path starts with “/de” (like “/designer-dresses/” or “/delivery-information.html”) will be excluded from crawling.

Instead, “Disallow: /de/” (with a trailing slash) excludes only that specific subdirectory.
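You can confirm this prefix behaviour with Python’s urllib.robotparser, which also treats a Disallow path as a prefix. In this sketch, the paths /de/ and /de/index.html and the user-agent name AnyBot are hypothetical; the other two paths come from the example above.

```python
from urllib.robotparser import RobotFileParser

# /de/ and /de/index.html are hypothetical; the other two paths come from the text.
PATHS = ["/de/", "/de/index.html", "/designer-dresses/", "/delivery-information.html"]


def blocked_paths(disallow_value):
    """Return the sample paths a generic crawler may not fetch under one Disallow rule."""
    parser = RobotFileParser()
    parser.parse(["User-agent: *", f"Disallow: {disallow_value}"])
    return [p for p in PATHS if not parser.can_fetch("AnyBot", "https://domain.com" + p)]


print(blocked_paths("/de"))   # all four paths
print(blocked_paths("/de/"))  # ['/de/', '/de/index.html']
```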


Comment

Note: Comments are meant for humans only.

Utilise comments within your robots.txt file to provide explanations and clarifications for humans who read it.

Comments are preceded by a hash (#) and can be positioned at the beginning of a line or after a directive on the same line:

# Don't allow access to the /wp-admin/ directory for all robots.
User-agent: *
Disallow: /wp-admin/

or

User-agent: * # Applies to all robots
Disallow: /wp-admin/ # Don't allow access to the /wp-admin/ directory.
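Crawlers ignore everything from the # to the end of the line. As a quick check, Python’s urllib.robotparser reads the commented example exactly as if the comments were not there. The paths /wp-admin/options.php and /blog/ and the name AnyBot are hypothetical.

```python
from urllib.robotparser import RobotFileParser

COMMENTED = """\
# Don't allow access to the /wp-admin/ directory for all robots.
User-agent: * # Applies to all robots
Disallow: /wp-admin/ # Don't allow access to the /wp-admin/ directory.
"""

parser = RobotFileParser()
parser.parse(COMMENTED.splitlines())

# The comments are stripped before parsing, so only the two directives remain.
print(parser.can_fetch("AnyBot", "https://domain.com/wp-admin/options.php"))  # False
print(parser.can_fetch("AnyBot", "https://domain.com/blog/"))                 # True
```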

Reference and Useful Links

- Robots.txt Introduction by Google: Link

- Create Robots.txt by Google: Link

- Robots.txt and SEO by Ahrefs: Link

- Robots.txt and SEO by Contentkingapp: Link