This article dives into Google Analytics 4's data modeling techniques. We'll explore behavioral, conversion, attribution, and predictive models—how they fill data gaps, impact your reports, and why Google's pushing them, with a focus on the growing importance of first-party data.

What is GA4 Data Modeling? #

In Google Analytics 4, modeling refers to a set of machine learning techniques applied where observed data from user interactions and conversions is missing or incomplete. They are used to:

- Estimate missing user data (behavioral modeling)

- Reattribute conversions (conversion modeling)

- Assign conversion credit (DDA attribution modeling)

- Predict future user behavior (predictive modeling)

Observed Data vs. Modeled Data #

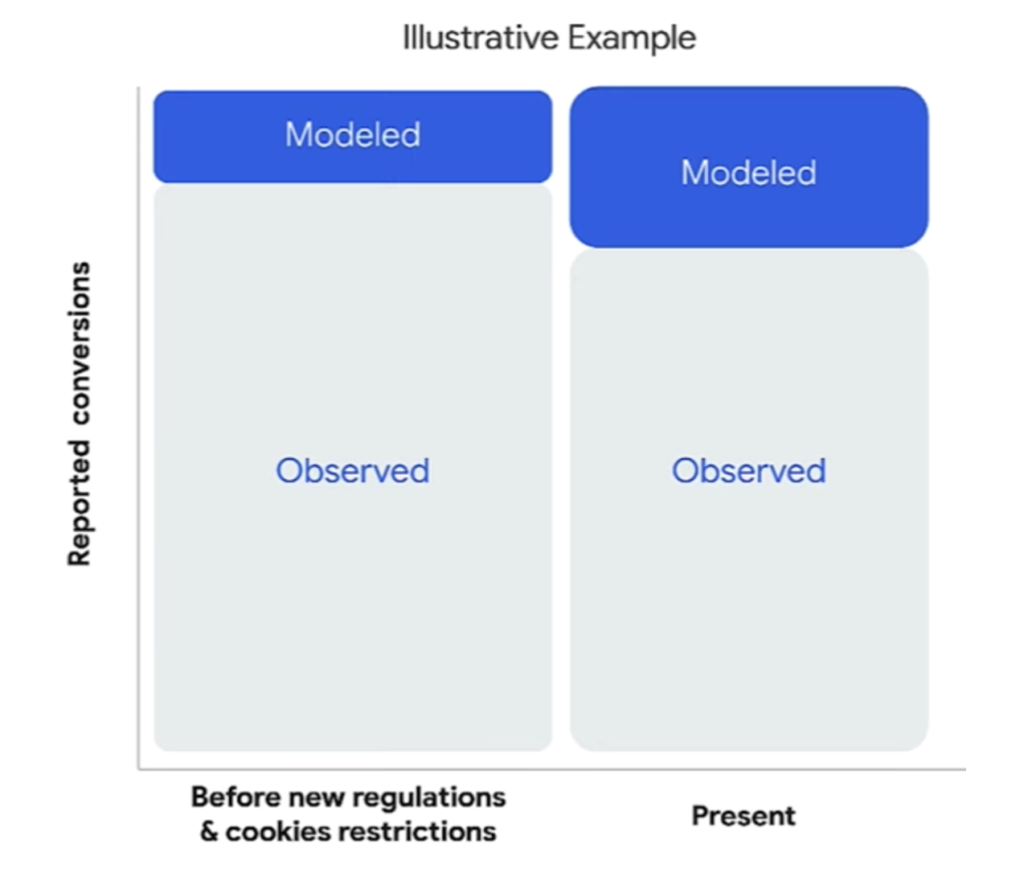

Traditionally, data in analytics has been categorised into two distinct types: observed data and modeled data.

Observed data is the lifeblood of analytics, representing the actual information captured directly from user interactions.

Modeled data, on the other hand, is not real data, but rather an estimate of what the data would be if it were available.

Google Analytics 4 (GA4) combines the two. It collects observed data through website and app activity, and it can also use that data to generate modeled data.

GA4 uses observed data where possible and modeled data where necessary.

This ensures a more complete picture of user behaviour even when direct measurements are not possible.

Why is Google Introducing These Models? #

The Shift Towards Modeled Data

Previously, we lived in a world of fully observable data, facilitated by tools like cookies and device identifiers. However, the measurement landscape has changed dramatically in recent years with the rise of privacy-focused browsers and the decline of cookies.

Regulatory changes: Regulations are impacting how user data can be captured and used.

Technology changes: Increased restrictions are impacting traditional data collection (e.g., third-party cookies and mobile ad identifiers).

These changes pose challenges for accurate data collection. According to an IAS 2021 Industry Pulse Report, 85% of digital media professionals cite cookie loss and accurate measurement as two of their top three challenges, with cross-device attribution being the third most significant concern.

In this new ecosystem, where change is the norm and the availability of identifiers is diminishing over time, the reliance on conversion modeling to provide comprehensive measurement reporting will continue to increase.

Why Does This Matter to Google? #

My personal opinion (coming from my cynical perspective):

I believe the primary reason behind these changes is to ensure that advertisers can see a higher, or at least stable, ROI from their advertising spend on Google platforms.

Do you remember ATT (App Tracking Transparency) from Apple? A couple of years ago, if you had an iPhone, you would have seen a prompt asking you to allow tracking.

Meta reported that it lost $10 billion because of this feature. The loss came not from any damage to its ability to serve ads, but from the damage to its ability to measure how effective those ads were.

Advertisers need to see a clear link between their spend and the resulting conversions. If they can’t measure this, they’re likely to cut back on their advertising budgets — a scenario that Google, a company that generated $230 billion in advertising revenue last year, wants to avoid at all costs.

Google has learned from Meta’s experience that providing accurate measurement of advertising return on investment (ROI) is crucial. For this reason, Google has released this suite of modeling techniques – conversion, behavioural, data-driven attribution, and predictive modeling. These models prevent advertisers from perceiving a lower ROI or higher cost per acquisition (CPA), despite obstacles to tracking user data.

Note: Google Analytics is shifting from a data tool to a marketing tool, focusing more on driving marketing strategies rather than just analysing data.

The GA4 Models Explained #

Here’s a breakdown of these models and their pros and cons.

Behavioural modeling #

Filling the Gaps

Behavioural modeling predicts the behavior of users who decline analytics cookies based on the behavior of similar users who accept analytics cookies.

According to Jaime Rodera, “Behavioral modeling drove a 23% increase in the observable traffic in analytics reporting on European and UK websites.”

Purpose

To fill gaps in user behaviour data across various touchpoints, especially when users have not consented to analytics storage cookies.

Challenges Addressed

This is where we need to introduce Consent Mode.

What is Consent Mode?

Consent Mode is another Google feature that restricts data collection for users who opt out of analytics storage cookies.

While it limits data collection, it doesn’t completely block it; some anonymized data is still collected from users who opt out. This anonymized data, combined with data from consenting users, is used in behavioural modeling.

By analysing these combined datasets, behavioural modeling can identify patterns and trends, allowing it to predict the missing user behaviour for those who opted out.
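To make the idea concrete, here is a deliberately simplified sketch in Python. It is not Google's algorithm, which is not public in this detail and relies on machine learning. It only shows the underlying intuition: take the per-user event rate of consenting users in a segment and apply it to the users in that segment who declined. The `Segment` class, the segment names, and every number are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """Hypothetical segment of site visitors (all figures are invented)."""
    name: str
    consented_users: int
    consented_events: int    # events observed from consenting users
    unconsented_users: int   # assumed count of users who declined cookies


def estimate_events(segments):
    """Estimate events for non-consenting users from the per-user
    event rate of consenting users in the same segment."""
    rows = []
    for s in segments:
        if s.consented_users == 0:
            # No observed behaviour to learn from, so nothing is modeled.
            rows.append((s.name, s.consented_events, 0.0))
            continue
        rate = s.consented_events / s.consented_users
        rows.append((s.name, s.consented_events, rate * s.unconsented_users))
    return rows


segments = [
    Segment("mobile", consented_users=600, consented_events=3000, unconsented_users=200),
    Segment("desktop", consented_users=400, consented_events=3200, unconsented_users=100),
]

results = estimate_events(segments)
for name, observed, modeled in results:
    print(f"{name}: observed={observed}, modeled={modeled:.0f}")
# mobile: observed=3000, modeled=1000
# desktop: observed=3200, modeled=800
```

The modeled figures are estimates layered on top of the observed ones. They are only as good as the assumption that users who decline cookies behave like users who accept them.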

Pros:

Cons:

Conversion modeling #

Reattributing Conversions

Purpose

Conversion modeling aims to attribute conversions to the “correct” marketing channels, particularly those that appear as “direct” traffic in Google Analytics.

Challenges Addressed

In Google Analytics, direct traffic usually means traffic without a referrer. That covers users navigating via bookmarks or typing URLs directly, but it can also include clicks from untracked sources (e.g. inbound links without UTM parameters).

This is problematic for advertisers because direct traffic is uninvestable — it lacks actionable data about the user’s journey.

Conversion modeling reassigns these conversions to more specific channels (often where Google Ads might play a role 😉).

Note: Conversion modeling will not change the total number of conversions collected by Google Analytics 4, but it will change the channels that those conversions are attributed to.

Example of Impact:

| Channel | Before conversion modeling | After conversion modeling |
|---|---|---|
| Direct | 40 | 20 |
| Paid Search | 40 | 60 |
| Total | 80 | 80 |

Imagine you spent £1000 on a paid search campaign. You see 40 conversions from the campaign and another 40 from “direct” traffic, giving you a cost per conversion of £25 (£1000 / 40 conversions).

Conversion modeling steps in and reallocates 20 of those “direct” conversions to your campaign. Now, you have 60 conversions from the campaign, reducing your cost per conversion to £16.67 (£1000 / 60 conversions).
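The arithmetic above can be checked with a few lines of Python. This is only a sketch of the worked example: the `cost_per_conversion` helper is a name chosen here for illustration, and the figures are the ones from the table.

```python
def cost_per_conversion(spend: float, conversions: int) -> float:
    """Return spend divided by the number of attributed conversions."""
    if conversions <= 0:
        raise ValueError("conversions must be positive")
    return spend / conversions


spend = 1000.0  # paid search spend in GBP, from the example

before = {"Direct": 40, "Paid Search": 40}
after = {"Direct": 20, "Paid Search": 60}

# Modeling moves credit between channels; the total does not change.
assert sum(before.values()) == sum(after.values()) == 80

cpa_before = cost_per_conversion(spend, before["Paid Search"])
cpa_after = cost_per_conversion(spend, after["Paid Search"])

print(f"Before: GBP {cpa_before:.2f}")  # Before: GBP 25.00
print(f"After:  GBP {cpa_after:.2f}")   # After:  GBP 16.67
```

Nothing about the campaign changed between the two figures. Only the attribution did, and the campaign now looks a third cheaper per conversion.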

Pros:

Cons:

Attribution modeling (DDA) #

Assigning Conversion Credit

For more details about this model, refer to my dedicated post.

Attribution modeling, particularly data-driven attribution (DDA), assigns conversion credit across various touchpoints in the customer journey.

Purpose:

Give marketers a more accurate view of how different channels and touchpoints influence conversions, rather than just looking at the last click.

Challenges Addressed:

Traditional last-click attribution models often overlook the contribution of earlier touchpoints. DDA uses machine learning to distribute credit more accurately across all interactions, providing a holistic view of marketing effectiveness.
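The difference between last-click and a multi-touch view is easy to see in a small Python sketch. Note the assumption: the second function below is a simple linear (even-split) model, used here as a stand-in for any model that shares credit across touchpoints. It is not DDA, which learns its weights from data. The conversion paths are invented.

```python
from collections import defaultdict


def last_click(paths):
    """Give all credit for each conversion to the final touchpoint."""
    credit = defaultdict(float)
    for path in paths:
        if not path:
            continue
        credit[path[-1]] += 1.0
    return dict(credit)


def linear(paths):
    """Split the credit for each conversion evenly across its touchpoints.
    A stand-in for multi-touch models in general, not DDA itself."""
    credit = defaultdict(float)
    for path in paths:
        if not path:
            continue
        share = 1.0 / len(path)
        for channel in path:
            credit[channel] += share
    return dict(credit)


# Each list is the ordered set of touchpoints before one conversion.
paths = [
    ["Paid Social", "Email", "Paid Search"],
    ["Paid Search"],
    ["Organic Search", "Paid Search"],
]

last_click_credit = last_click(paths)
linear_credit = linear(paths)

print(last_click_credit)
for channel, value in sorted(linear_credit.items()):
    print(f"{channel}: {value:.2f}")
```

Under last click, Paid Search receives all three conversions and the other channels receive nothing. Under the even split, Paid Social, Email, and Organic Search each pick up part of the credit while the total stays at three.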

Pros:

Cons:

Predictive modeling #

Forecasting the Future

Purpose

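Predictive modeling forecasts future outcomes based on historical data and trends. In GA4 this surfaces as predictive metrics such as purchase probability, churn probability, and predicted revenue.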

Challenges Addressed:

Beyond understanding past and present conversions, predictive modeling allows advertisers to anticipate future performance, optimising their strategies proactively.
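As a generic illustration of forecasting from historical data, the Python sketch below fits a least-squares straight line to five weeks of conversions and extrapolates one week ahead. This is an assumption-laden toy, not how GA4 builds its predictions: GA4 uses machine learning models whose details are not described here, and the weekly figures are invented.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


weeks = [1, 2, 3, 4, 5]
conversions = [100, 108, 121, 130, 141]  # invented historical data

slope, intercept = fit_line(weeks, conversions)
forecast_week_6 = intercept + slope * 6

print(f"Trend: {slope:.1f} extra conversions per week")  # Trend: 10.4 extra conversions per week
print(f"Forecast for week 6: {forecast_week_6:.1f}")     # Forecast for week 6: 151.2
```

A straight-line trend assumes the future looks like the recent past. Any real predictive model carries the same kind of assumption, which is worth keeping in mind when acting on its output.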

Pros:

Cons:

Conclusions #

The Importance of First-Party Data #

In today’s marketing landscape, artificial intelligence (AI) and machine learning (ML) models are playing an increasingly prominent role. Platforms like Google Analytics 4, Meta, and Microsoft are all utilising these advanced technologies to analyse user data, model behaviour, attribute conversions, and optimise ad performance.

However, this heavy reliance on AI/ML raises some important questions about transparency and objectivity.

With so much data processing and modeling happening behind the scenes, it can be difficult to understand what you’re truly looking at.

What is it I’m looking at?

Are these models presenting an accurate reflection of reality, or are they designed to show the platforms’ services in the best light possible?

This lack of clarity is concerning, especially when you consider that major ad platforms aren’t exactly known for their transparency. There’s a risk that the data could be manipulated or skewed to encourage more ad spend on their networks.

Do you trust Google to mark their own homework?

Without first-party data, you’re left blindly trusting that Google, Meta, Microsoft, and others are accurately representing performance metrics and optimising campaigns in an unbiased way. But with your own data warehouse or repository, you can apply AI/ML models yourself or scrutinise the platforms’ methodologies.

Of course, establishing a first-party data strategy is easier said than done. Many brands and businesses still don’t have a centralised data hub or the technical resources to effectively utilise advanced modeling. But you can’t even think about that until you at least have the data.

In this new AI/ML-centric marketing reality, having ownership and control of your customer data is paramount. While the leading ad platforms are already wielding these technologies, the real power comes from developing your own robust, first-party data strategy. Only then can you truly understand what the models are telling you and ensure your marketing efforts are optimised objectively.

Vidhya Srinivasan (Google’s VP of Ads Buying, Analytics and Measurement) put it this way:

“The future is based on first-party data. The future is consented. And the future is modeled.”